In a nutshell

- Dia-1.6B, a small open-source AI model, claims to outperform Sesame and ElevenLabs at emotional speech synthesis.

- Convincing emotional AI speech is still hard to deliver because of the complexity of human emotions and technical limitations.

- The “uncanny valley” problem persists: AI voices sound human but fail to convey nuanced emotions, despite fierce competition.

Nari Labs has released Dia-1.6B, an open-source text-to-speech model that it claims produces emotionally expressive speech on par with Sesame and ElevenLabs. At only 1.6 billion parameters the model is remarkably small, yet it still generates realistic dialogue complete with coughs, crying, and emotional inflections.

It’ll make you cry in awe.

Text-to-speech AI just got solved.

This model does great screams, emotion, and alarm.

Clearly beats Sesame and 11Labs.

— it’s just 1.6B params

— streams in real time on a single Nvidia GPU

— made by a team of 1.5 people in Korea!! It’s called Dia by Nari Labs. pic.twitter.com/rpeZ5lOe9z

— Deedy (@deedydas) April 22, 2025

That may not sound like much of a technical achievement, but even OpenAI’s ChatGPT is stumped by it. “I don’t cry, but I can certainly speak up,” the chatbot replied when asked to.

Some AI models will cry if you explicitly ask them to. But it doesn’t happen naturally or spontaneously, and that, reportedly, is Dia-1.6B’s superpower: it understands when crying is appropriate in a given context.

Nari’s model runs in real time on a single GPU with 10GB of VRAM, processing about 40 tokens per second on an Nvidia A4000. Unlike more popular closed-source options, Dia-1.6B is freely available under the Apache 2.0 license through its Hugging Face and GitHub repositories.
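To put a tokens-per-second figure in perspective, it helps to convert it into seconds of audio produced per second of compute. The sketch below does that conversion. The rate of roughly 86 codec tokens per second of audio is an assumption based on our reading of the project’s README, not a figure from the announcement; treat it as a tunable parameter.

```python
# Assumption: the audio codec emits roughly 86 tokens per second of audio.
# This number is not from the article; override it if the model card differs.
TOKENS_PER_AUDIO_SECOND = 86.0


def audio_seconds_per_wall_second(tokens_per_second: float,
                                  tokens_per_audio_second: float = TOKENS_PER_AUDIO_SECOND) -> float:
    """Seconds of audio generated for each second of inference."""
    if tokens_per_second <= 0 or tokens_per_audio_second <= 0:
        raise ValueError("rates must be positive")
    return tokens_per_second / tokens_per_audio_second


def is_real_time(tokens_per_second: float,
                 tokens_per_audio_second: float = TOKENS_PER_AUDIO_SECOND) -> bool:
    """Real time means at least one second of audio per second of compute."""
    return audio_seconds_per_wall_second(tokens_per_second, tokens_per_audio_second) >= 1.0


if __name__ == "__main__":
    reported_rate = 40.0  # tokens per second on an A4000, as reported above
    speed = audio_seconds_per_wall_second(reported_rate)
    print(f"{speed:.2f} s of audio per second of compute; real time: {is_real_time(reported_rate)}")
```

Keeping the codec rate as a parameter makes the “real time” label something you can check against whatever token rate the model actually uses, rather than take on faith.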

“One crazy goal: create a TTS model that rivals ElevenLabs Studio, NotebookLM Podcast, and Sesame CSM. Somehow we pulled it off,” Toby Kim, co-founder of Nari Labs, posted on X when announcing the model. Compared with its competitors, which often flatten delivery or skip nonverbal tags entirely, Dia handles natural dialogue and nonverbal expressions better.

The AI race to create emotion

AI platforms are increasingly making their text-to-speech models emotion-aware, addressing a weak point in human-machine interaction. However, they are not perfect, and most models, open or closed, tend to produce an uncanny valley effect that diminishes the user experience.

We have tried and compared a few platforms that focus on emotional speech, and most of them are quite good as long as users adopt the right mindset and understand their limitations. Still, the technology is far from convincing.

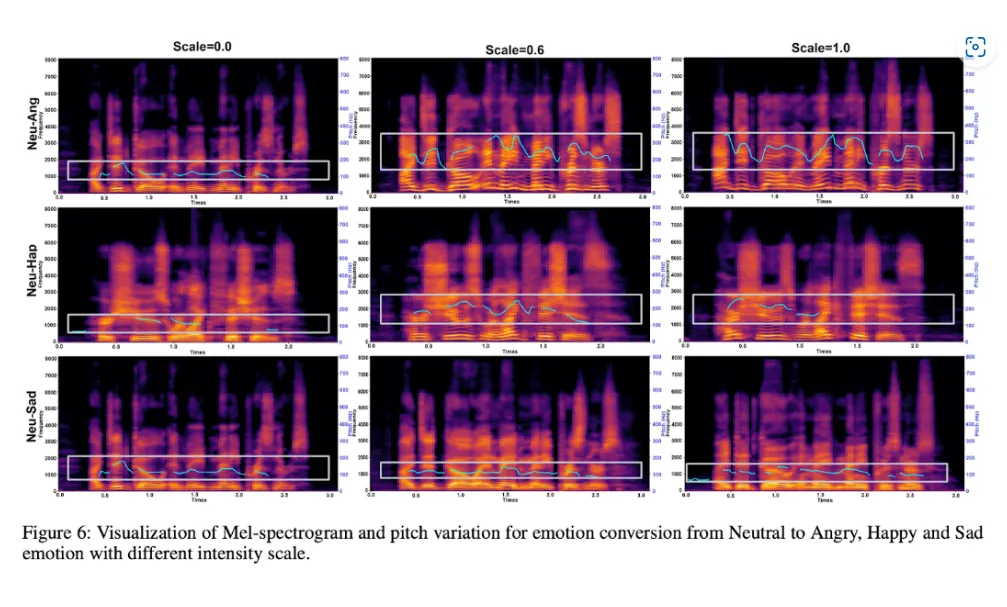

Researchers are tackling this problem in several ways. Some train models on datasets with emotional labels, enabling AI to learn the acoustic patterns associated with different emotional states. Others use deep neural networks and large language models to analyze contextual cues and generate appropriate emotional tones.
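The first approach, learning acoustic patterns from labeled clips, can be illustrated at toy scale with a nearest-centroid classifier. This is a minimal sketch, not how any production system works: the two features (a normalized pitch score and a normalized energy score), the labels, and the sample values are all hypothetical.

```python
import math
from collections import defaultdict
from statistics import mean

Features = tuple[float, float]  # hypothetical (normalized pitch, normalized energy)


def fit_centroids(data: list[tuple[Features, str]]) -> dict[str, Features]:
    """Average the features of every clip that shares an emotion label."""
    grouped: dict[str, list[Features]] = defaultdict(list)
    for features, label in data:
        grouped[label].append(features)
    return {
        label: (mean(f[0] for f in feats), mean(f[1] for f in feats))
        for label, feats in grouped.items()
    }


def predict(centroids: dict[str, Features], features: Features) -> str:
    """Assign the label whose learned centroid is closest to the new clip."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


if __name__ == "__main__":
    labeled_clips = [
        ((1.2, 0.9), "angry"), ((1.0, 1.1), "angry"),
        ((-0.9, -0.8), "sad"), ((-1.1, -1.0), "sad"),
        ((0.0, 0.1), "neutral"), ((0.2, -0.1), "neutral"),
    ]
    centroids = fit_centroids(labeled_clips)
    print(predict(centroids, (0.9, 0.8)))
```

Real systems learn far richer representations, but the principle is the same: the model can only associate sounds with emotions that the labels already name.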

ElevenLabs, one of the industry leaders, tries to infer emotional context directly from text input, examining linguistic cues, sentence structure, and punctuation to deduce the appropriate emotional tone. Its flagship model, Eleven Multilingual v2, is known for its rich emotional expression across 29 languages.
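ElevenLabs has not published how its model reads emotion from text, so the following is only a toy illustration of the general idea of inferring tone from surface cues such as punctuation and word choice. The cue lists, labels, and thresholds are invented for the example and are not ElevenLabs’ method.

```python
import re

# Hypothetical cue lists for illustration only.
EXCITED_WORDS = {"amazing", "wow", "incredible", "finally"}
SAD_WORDS = {"sorry", "unfortunately", "miss", "lost"}


def guess_tone(sentence: str) -> str:
    """Guess a coarse tone label from punctuation and word choice."""
    words = set(re.findall(r"[a-z']+", sentence.lower()))
    stripped = sentence.rstrip()
    exclamations = sentence.count("!")
    # Several exclamation marks, or one plus an excited word, reads as excitement.
    if exclamations >= 2 or (exclamations == 1 and words & EXCITED_WORDS):
        return "excited"
    # Sad vocabulary or a trailing ellipsis reads as somber.
    if words & SAD_WORDS or stripped.endswith("..."):
        return "somber"
    if stripped.endswith("?"):
        return "curious"
    return "neutral"


if __name__ == "__main__":
    for line in ["Wow, we finally did it!", "I'm sorry, we lost the file.", "Is it ready?"]:
        print(line, "->", guess_tone(line))
```

A learned model weighs these signals jointly and in context; hard-coded rules like these break down quickly, which is part of why the problem is hard.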

OpenAI also recently released “gpt-4o-mini-tts” with customizable emotional expression. In demos, the company highlighted the ability to specify emotions like “apologetic” for customer support scenarios, and it made the service available to developers at 1.5 cents per minute. Although its state-of-the-art Advanced Voice mode does a good job of mimicking human emotion, it came across as exaggerated and overly enthusiastic and fell short of Hume in our tests.
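A minimal sketch of how a developer might request an “apologetic” delivery, assuming the official `openai` Python SDK, its `audio.speech` endpoint with an `instructions` field, and the `coral` voice; check all of these against OpenAI’s current API reference. The `synthesize` helper takes an already-constructed client and is not called here, and the cost helper simply applies the 1.5-cents-per-minute price reported above.

```python
from pathlib import Path

PRICE_PER_MINUTE_USD = 0.015  # 1.5 cents per minute, as reported in the article


def speech_request(text: str, tone: str, voice: str = "coral") -> dict:
    """Build keyword arguments for an emotion-steered gpt-4o-mini-tts request."""
    return {
        "model": "gpt-4o-mini-tts",
        "voice": voice,  # assumed voice name; verify against the current voice list
        "input": text,
        "instructions": f"Speak in a way that sounds {tone}.",
    }


def synthesize(client, text: str, tone: str, out_path: Path) -> None:
    """Stream generated speech to a file using an openai.OpenAI() client instance."""
    with client.audio.speech.with_streaming_response.create(**speech_request(text, tone)) as response:
        response.stream_to_file(out_path)


def estimated_cost_usd(minutes_of_audio: float) -> float:
    """Estimate spend at the reported per-minute price."""
    return round(minutes_of_audio * PRICE_PER_MINUTE_USD, 4)


if __name__ == "__main__":
    request = speech_request("I'm sorry about the delay with your order.", "apologetic")
    print(request["instructions"])
    print(f"60 minutes of audio: ${estimated_cost_usd(60):.2f}")
```

Keeping the request as plain keyword arguments makes the emotional steering explicit: the tone lives in a separate natural-language instruction rather than in the text to be spoken.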

Dia-1.6B may break new ground in how it handles nonverbal communication. By synthesizing laughter, coughing, and throat clearing when triggered by specific text cues such as “(laughs)” or “(coughs)”, the model adds a layer of realism often missing from standard TTS output.
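The sketch below shows what a cue-annotated dialogue script might look like and how to check which parenthesized cues it contains. The `[S1]`/`[S2]` speaker tags follow the format we understand the project’s README to use, which is an assumption here; the cue set is limited to the three the article names, and passing the script to the model is left out, so check the Dia repository for the current inference API.

```python
import re

# The three cues named in the article; the model may accept others.
NONVERBAL_CUES = {"laughs", "coughs", "clears throat"}

# Matches "(laughs)", "( coughs )", "(clears throat)" and captures the cue text.
CUE_PATTERN = re.compile(r"\(\s*([a-z ]+?)\s*\)")


def build_script(turns: list[tuple[int, str]]) -> str:
    """Join (speaker number, line) pairs into one script with assumed [S1]/[S2] tags."""
    return " ".join(f"[S{speaker}] {line.strip()}" for speaker, line in turns)


def find_cues(script: str) -> list[str]:
    """Return the parenthesized cues in order, with surrounding spaces removed."""
    return [match.group(1) for match in CUE_PATTERN.finditer(script)]


def unknown_cues(script: str) -> list[str]:
    """List cues that are not in the article's three named cues."""
    return [cue for cue in find_cues(script) if cue not in NONVERBAL_CUES]


if __name__ == "__main__":
    script = build_script([(1, "Did you hear that? (laughs)"), (2, "(clears throat) Yes, I did.")])
    print(script)
    print(find_cues(script))
```

Checking cues before generation is a cheap way to catch typos such as “(laugh)” that a model may not treat as a nonverbal trigger.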

Other notable open-source projects include Orpheus, known for ultra-low latency and lifelike emotional expression, and EmotiVoice, a multi-voice TTS engine that supports emotion as a controllable style factor.

It’s hard to be human

But why is emotional speech so difficult? After all, AI models stopped sounding robotic long ago.

It seems naturalness and emotion are two different beasts. A model can sound human and have a convincing, smooth voice, yet be utterly incapable of conveying emotion beyond simple narration.

“Emotional speech synthesis is hard, in my view, because the data it relies on lacks emotional granularity,” said Kaveh Vahdat, CEO of RiseAngle, an AI video generation company. “Most training datasets capture speech that is clean and intelligible, but not deeply expressive. Emotion is not just tone or pitch; it is context, pacing, tension, and hesitation. These features are often implicit and rarely labeled in a way machines can learn from.”

“Even when emotion tags are used, they tend to flatten the complexity of real human affect into broad categories like ‘happy’ or ‘angry,’ which is far from how emotion actually works in speech,” Vahdat argued.
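A toy illustration of the flattening Vahdat describes. It assumes a two-dimensional valence/arousal view of affect, a common framing in emotion research that the article itself does not specify, and four made-up prototype points. Two quite different states end up with the same label.

```python
import math

# Hypothetical prototypes on a valence (x) / arousal (y) plane, both in [-1, 1].
PROTOTYPES = {
    "happy": (0.8, 0.5),
    "angry": (-0.7, 0.7),
    "sad": (-0.7, -0.5),
    "calm": (0.6, -0.6),
}


def flatten(valence: float, arousal: float) -> str:
    """Collapse a continuous affect reading onto the nearest discrete label."""
    return min(PROTOTYPES, key=lambda label: math.dist((valence, arousal), PROTOTYPES[label]))


if __name__ == "__main__":
    jittery_excitement = (0.2, 0.9)  # mildly positive, highly aroused
    contentment = (0.9, 0.4)         # strongly positive, moderately aroused
    print(flatten(*jittery_excitement), flatten(*contentment))
```

Once both readings become “happy”, a TTS model trained on those labels has no way to recover the difference between them.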

We tested Dia, and it is genuinely good. It produced about one second of audio per second of inference, and while it does convey tone, the delivery is so exaggerated that it doesn’t feel believable. And this is the crux of the issue: models lack enough contextual awareness to isolate a single emotion without additional cues and render it coherently enough for people to accept it as part of a natural interaction.

The “uncanny valley” effect poses a particular challenge, as synthetic speech cannot make up for a neutral robotic voice simply by adopting a more emotional tone.

There are also numerous technical challenges. AI systems often show low classification accuracy in speaker-independent experiments, performing poorly on speakers who were not included in their training data. Processing emotional speech in real time also requires significant computational power, which limits its use on consumer devices.
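A speaker-independent evaluation is usually built with a leave-one-speaker-out split: each fold tests on a speaker the model never heard during training. The sketch below shows the split itself; the dict-based samples and the `speaker` field are hypothetical.

```python
from collections import defaultdict
from typing import Iterator


def leave_one_speaker_out(samples: list[dict]) -> Iterator[tuple[str, list[dict], list[dict]]]:
    """Yield (held_out_speaker, train, test) so every test speaker is unseen in training."""
    by_speaker: dict[str, list[dict]] = defaultdict(list)
    for sample in samples:
        by_speaker[sample["speaker"]].append(sample)
    for held_out in sorted(by_speaker):
        train = [s for s in samples if s["speaker"] != held_out]
        yield held_out, train, by_speaker[held_out]


if __name__ == "__main__":
    clips = [
        {"speaker": "a", "emotion": "happy"},
        {"speaker": "b", "emotion": "sad"},
        {"speaker": "a", "emotion": "angry"},
        {"speaker": "c", "emotion": "happy"},
    ]
    for speaker, train, test in leave_one_speaker_out(clips):
        print(speaker, len(train), len(test))
```

Accuracy averaged over these folds is typically lower than on a random split, because a random split lets the model see the same voices it is later tested on.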

Data quality and bias pose significant challenges as well. Training AI for emotional speech requires large, diverse datasets that capture emotions across demographics, languages, and contexts. Systems trained on one group may perform worse on others; for instance, AI trained primarily on Caucasian speech patterns might struggle with other demographics.
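One simple way to surface this kind of bias is to break accuracy down by demographic group instead of reporting a single average. The sketch below assumes hypothetical `(group, true_label, predicted_label)` records; the group names and data are placeholders.

```python
from collections import defaultdict


def accuracy_by_group(records: list[tuple[str, str, str]]) -> dict[str, float]:
    """Compute accuracy separately for each group from (group, truth, prediction) records."""
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for group, truth, prediction in records:
        totals[group] += 1
        hits[group] += int(truth == prediction)
    return {group: hits[group] / totals[group] for group in totals}


def largest_gap(accuracies: dict[str, float]) -> float:
    """Difference between the best- and worst-served groups."""
    return max(accuracies.values()) - min(accuracies.values())


if __name__ == "__main__":
    results = [
        ("group_a", "happy", "happy"), ("group_a", "sad", "sad"),
        ("group_a", "angry", "angry"), ("group_a", "calm", "sad"),
        ("group_b", "happy", "calm"), ("group_b", "sad", "sad"),
        ("group_b", "angry", "calm"), ("group_b", "calm", "happy"),
    ]
    per_group = accuracy_by_group(results)
    print(per_group, largest_gap(per_group))
```

An overall accuracy of 50% on this toy data would hide the fact that one group is served three times better than the other.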

Some researchers argue that because AI lacks consciousness, it can never truly mimic human emotion. This is perhaps the most fundamental point: AI can model emotions based on patterns, but it lacks the empathy and lived experience that people bring to emotional interactions.

Guess it’s harder to be human than it seems. Sorry, ChatGPT.
