In a nutshell

- Open-source models are proving able to produce coherent videos that last minutes, challenging their closed-source alternatives.

- SkyReels-V2 breaks video length limitations with its “diffusion forcing framework,” which enables infinite-duration AI video generation while maintaining consistent quality throughout.

- By cleverly compressing older frames, FramePack needs only 6GB of VRAM to produce minute-long videos at 30 frames per second, bringing long-form AI video generation to consumer hardware for the first time.

Open-source video generators are heating up and giving closed-source behemoths a run for their money.

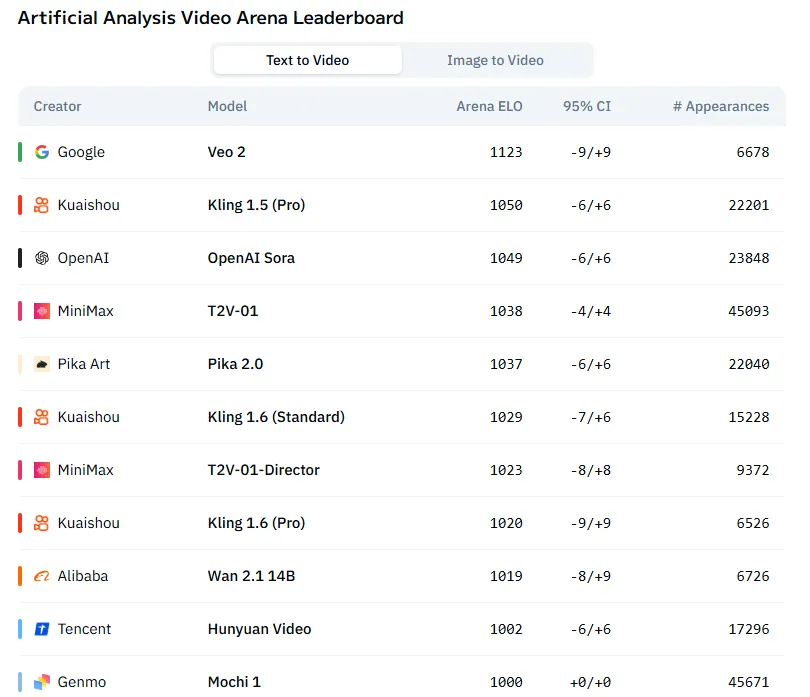

With three models (Wan, Mochi, and Hunyuan) ranking in the top 10 of all AI video generators, open-source options are more customizable, less restricted, uncensored, and even free to use.

The most recent innovation extends video duration beyond the usual few seconds, with two new models demonstrating the ability to produce content that lasts minutes rather than seconds.

In fact, SkyReels-V2, released this month, claims it can generate scenes of potentially infinite duration while maintaining consistency throughout. FramePack, meanwhile, lets users with lower-end hardware make lengthy videos without burning out their PCs.

SkyReels-V2: Infinite Video Generation

SkyReels-V2 addresses four crucial issues that earlier models left unresolved, making it a significant advance in video generation technology. Its creators describe the system as an “Infinite-Length Film Generative Model” that synergizes several AI technologies.

The model accomplishes this through what its creators call a “diffusion forcing framework,” which allows video content to be extended seamlessly with no explicit length limit.

It works by conditioning on the final frames of previously generated content to produce new segments, preventing quality degradation over extended sequences. In other words, the model looks at the last frames it just created to decide what comes next, ensuring smooth transitions and consistent quality.

Quality degradation is the main reason why video generators typically stick to short clips of around 10 seconds: any longer, and the generation tends to lose coherence.
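
The extension scheme described above, where each new segment is conditioned on the final frames of what already exists, can be sketched roughly as follows. This is a toy illustration only, not SkyReels-V2's actual diffusion forcing code: `extend_video`, `toy_generator`, and the `context_frames` and `segment_frames` parameters are hypothetical names, and integers stand in for image frames.

```python
from typing import Callable, List

Frame = int  # hypothetical stand-in for an image tensor


def extend_video(
    first_segment: List[Frame],
    generate_segment: Callable[[List[Frame], int], List[Frame]],
    total_frames: int,
    context_frames: int = 4,
    segment_frames: int = 8,
) -> List[Frame]:
    """Grow a video segment by segment, conditioning each new segment
    on the last `context_frames` frames generated so far."""
    video = list(first_segment)
    while len(video) < total_frames:
        # Only the tail of the video is shown to the generator.
        context = video[-context_frames:]
        needed = min(segment_frames, total_frames - len(video))
        video.extend(generate_segment(context, needed))
    return video


def toy_generator(context: List[Frame], n: int) -> List[Frame]:
    # Toy "model" (assumption): continue counting from the last context frame.
    last = context[-1]
    return [last + i + 1 for i in range(n)]


video = extend_video([0, 1, 2, 3], toy_generator, total_frames=30)
```

Because the generator only ever sees a fixed-size tail, the cost of producing each new segment does not grow with the length of the video, which is what makes open-ended extension possible in principle.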

The results are quite impressive. Videos posted on social media by developers and enthusiasts show that the model is fairly coherent and that the images don’t lose quality.

Subjects remain identifiable throughout the long clips, and the backgrounds don’t warp or introduce artifacts that could break the scene.

SkyReels-V2 incorporates several innovative components, including a new captioner that combines knowledge from general-purpose language models with specialized “shot-expert” models to ensure precise alignment with cinematic terminology. This helps the system better understand and execute professional film techniques.

The system uses a multi-stage training pipeline that progressively increases resolution from 256p to 720p, raising output resolution while maintaining visual coherence. To improve motion quality, a persistent issue in AI video generation, the team applied reinforcement learning specifically designed to produce natural movement patterns.

The model can be tried at Skyreels.AI. Users get enough credits to generate just one video; anything beyond that requires a subscription starting at $8 per month.

However, those who want to run it locally will need a god-tier PC. The team states on GitHub that generating a 540P video with the 1.3B model requires approximately 14.7GB of peak VRAM, while a video at the same resolution with the 14B model requires approximately 51.2GB of peak VRAM.

FramePack: Prioritizing Efficiency

Potato PC owners can rejoice, too. There is also something for you.

FramePack takes a different approach from SkyReels, focusing on efficiency rather than just length. When optimized, FramePack nodes can reach impressive generation speeds of just 1.5 seconds per frame while requiring only 6GB of VRAM.

The bare minimum GPU memory needed to generate a 1-minute video (60 seconds) at 30 frames per second (1,800 frames) with a 13B model is 6GB. (Yes, 6GB, not a typo.) The research team stated in the project’s official GitHub repo that “laptop GPUs are acceptable.”
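
To put those figures together, here is a back-of-the-envelope calculation. It is a rough sketch that assumes the optimized rate of 1.5 seconds per frame quoted above holds for the entire clip, which real hardware may not deliver; the helper names are hypothetical.

```python
def frame_count(duration_s: float, fps: int) -> int:
    """Number of frames in a clip of the given length and frame rate."""
    return round(duration_s * fps)


def generation_minutes(frames: int, seconds_per_frame: float) -> float:
    """Wall-clock minutes to render `frames` at a constant per-frame cost."""
    return frames * seconds_per_frame / 60


frames = frame_count(60, 30)               # 1800 frames in a 1-minute clip
minutes = generation_minutes(frames, 1.5)  # 45.0 minutes at 1.5 s per frame
```

In other words, under these assumptions a one-minute clip would take roughly 45 minutes to render: slow, but feasible on hardware that could not run larger pipelines at all.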

This low hardware requirement could democratize AI video technology, bringing advanced generation capabilities within reach of consumer-grade GPUs.

With a compact model size of only 1.3 billion parameters (compared to tens of billions for other models), FramePack could enable deployment on edge devices and wider adoption across industries.

FramePack was developed by researchers at Stanford University. The team included Lvmin Zhang, better known in the generative AI community as illyasviel, the dev-influencer behind numerous open-source resources for AI artists, such as the various ControlNets and IC-Light nodes from the SD1.5/SDXL era.

FramePack’s key innovation is a clever memory compression scheme that prioritizes frames based on their importance. Rather than treating all previous frames equally, the system allocates more computing resources to recent frames while progressively compressing older ones.
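
The idea of spending less memory on older frames can be illustrated with a simple geometric schedule. The sketch below is an assumption-laden toy, not FramePack's actual implementation: the function name, the 1,536-token budget for the newest frame, and the halving ratio are all hypothetical values chosen for illustration.

```python
from typing import List


def context_budget(
    num_past_frames: int,
    newest_tokens: int = 1536,  # hypothetical budget for the most recent frame
    ratio: int = 2,             # hypothetical compression factor per step of age
) -> List[int]:
    """Tokens allotted to each past frame, newest first.

    Each older frame gets 1/ratio of the budget of the frame after it,
    so very old frames eventually shrink to zero tokens.
    """
    budgets = []
    tokens = newest_tokens
    for _ in range(num_past_frames):
        budgets.append(tokens)
        tokens //= ratio
    return budgets


compressed_total = sum(context_budget(1800))  # geometric schedule
uniform_total = 1800 * 1536                   # every frame kept at full size
```

With a halving schedule, the total context stays below twice the newest frame's budget no matter how many frames precede it, whereas a uniform budget grows linearly with video length. That bounded total is why memory use can stay flat as the video gets longer.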

Using FramePack nodes under ComfyUI, the interface used to generate videos locally, yields very good results, especially given how little hardware is needed. Enthusiasts have produced 120 seconds of consistent video with minimal errors, outperforming SOTA models that offer excellent quality but degrade severely when users push their limits and extend videos beyond a few seconds.

FramePack is available for local installation through its official GitHub repository. The team made it clear that the project has no official website and that any other URLs using its name are scam sites not connected to the project.

The researchers warned users: “Do not pay money or download files from any of those websites.”

The advantages of FramePack include the possibility of small-scale training, higher-quality outputs as a result of “less aggressive schedulers with less extreme flow shift timesteps,” consistent visual quality throughout lengthy videos, and compatibility with existing video diffusion models like HunyuanVideo and Wan.

Edited by Josh Quittner and Sebastian Sinclair
