Good news for AI developers and hobbyists: Nvidia just made it cheaper to build AI-powered robots, drones, smart cameras and other gadgets that need a brain. The company’s new Jetson Orin Nano Super, which was unveiled on Tuesday and is available now, has more processing power than its predecessor and costs $249.

The palm-sized computer delivers a 70% performance increase, reaching 67 trillion operations per second for AI tasks. That’s a significant leap over earlier models, particularly for powering things like robots, computer vision, and automation software.

“This is a brand-new Jetson Nano Super. About 70 trillion operations per second, 25 watts and $249,” Nvidia CEO Jensen Huang said in an official video reveal from his home. “It runs everything the HGX does, it also runs LLMs.”

Memory bandwidth also got a major upgrade, rising to 102 gigabytes per second, 50% faster than the previous generation of the Jetson. That lets the device process data from up to four cameras at once and run more demanding AI models.
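The percentage gains quoted above also imply rough figures for the previous model. A minimal back-of-the-envelope sketch, assuming each gain applies directly to the headline number (67 TOPS and 102 GB/s); the helper name `implied_baseline` is hypothetical:

```python
# Back out the previous generation's figures from the quoted percentage gains.
# Assumption: each gain applies directly to the headline number.

def implied_baseline(new_value: float, gain_pct: float) -> float:
    """Return the value that, increased by gain_pct percent, gives new_value."""
    return new_value / (1 + gain_pct / 100)

specs = {
    "AI performance (TOPS)": (67, 70),     # 67 TOPS after a 70% increase
    "Memory bandwidth (GB/s)": (102, 50),  # 102 GB/s after a 50% increase
}

baselines = {name: implied_baseline(new, gain) for name, (new, gain) in specs.items()}

for name, old in baselines.items():
    print(f"{name}: about {old:.0f} on the previous model")
```

This only recovers what the percentages imply; it is not a substitute for the older board’s published specifications.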

The system pairs a 6-core Arm processor with an Nvidia Ampere-architecture GPU, which lets it run several AI applications simultaneously. That gives developers room to work with more complex capabilities, like building smaller models for robots that can map their surroundings, recognize images, and respond to voice commands with minimal processing power.

Existing Jetson Orin Nano owners aren’t left out in the cold, either. Nvidia is releasing software updates to improve the efficiency of its older AI chips.

The numbers behind Nvidia’s new Jetson Orin Nano Super tell an interesting story. With only 1,024 CUDA cores, it looks modest next to the RTX 2060’s 1,920 cores, the RTX 3060’s 3,584, or the RTX 4060’s 3,072. But raw core count doesn’t tell the full story.
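To put the core counts quoted above side by side, here is a small sketch that expresses each RTX card’s CUDA core count as a multiple of the Jetson’s. It assumes the figures in the text and deliberately ignores that these chips span different architectures (Turing, Ampere, Ada Lovelace) and clock speeds, which is exactly why raw ratios can mislead:

```python
# CUDA core counts as quoted in the article.
cuda_cores = {
    "Jetson Orin Nano Super": 1024,
    "RTX 2060": 1920,
    "RTX 3060": 3584,
    "RTX 4060": 3072,
}

jetson = cuda_cores["Jetson Orin Nano Super"]

# Each RTX card's core count as a multiple of the Jetson's.
ratios = {
    name: cores / jetson
    for name, cores in cuda_cores.items()
    if name != "Jetson Orin Nano Super"
}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}x the Jetson's CUDA cores")
```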

While gaming GPUs like the RTX series draw between 115 and 170 watts, the Jetson sips a mere 7 to 25 watts. Even at its 25-watt maximum, that’s roughly a fifth of the 115 watts drawn by an RTX 4060, the most efficient of the bunch, and about a seventh of a 170-watt card.
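The power comparison is simple division, sketched below with the wattages quoted above. It assumes the RTX 4060 sits at the 115-watt low end of the 115 to 170 watt range and compares against the Jetson’s 25-watt ceiling; the constant names are illustrative:

```python
# Power figures quoted in the article.
JETSON_MAX_W = 25  # top of the Jetson's 7-25 W range
GPU_LOW_W = 115    # assumed RTX 4060 draw (low end of the quoted range)
GPU_HIGH_W = 170   # top of the quoted range for gaming GPUs

def share_of(jetson_w: float, gpu_w: float) -> float:
    """Jetson power draw as a fraction of a GPU's power draw."""
    return jetson_w / gpu_w

for gpu_w in (GPU_LOW_W, GPU_HIGH_W):
    frac = share_of(JETSON_MAX_W, gpu_w)
    print(f"{JETSON_MAX_W} W vs {gpu_w} W: {frac:.0%} of the power, "
          f"about 1/{gpu_w / JETSON_MAX_W:.1f}")
```

At 25 W the Jetson uses about 22% of a 115 W card’s power (roughly one-fifth) and about 15% of a 170 W card’s (roughly one-seventh).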

The memory bandwidth numbers paint a similar picture. The Jetson’s 102 GB/s may seem underwhelming next to the RTX cards’ 300+ GB/s, but it’s tailored specifically for AI workloads at the edge, where efficient data processing matters more than raw throughput.

That said, the real magic is in AI performance. The device delivers 67 TOPS (trillion operations per second) for AI tasks, a figure that’s hard to compare directly with RTX cards’ TFLOPS ratings because the two measure different types of operations: TOPS typically counts low-precision integer operations, while TFLOPS counts floating-point ones.

In practical terms, it’s basically neck-and-neck with an RTX 2060 for AI tasks at a fraction of the cost and power consumption. Running local AI chatbots, processing several camera feeds, and controlling robots can all be done on a power budget far smaller than a gaming GPU needs.

Although 8GB of shared memory may seem low, it’s more than the 6GB of VRAM on a typical RTX 2060. That gives the Jetson an edge when running local AI models like Flux or Stable Diffusion, which can push those GPUs into an “out of memory” error or force them to offload some of the work to regular system RAM. Offloading increases inference time, which is essentially how long the AI spends “thinking” before it produces a result.
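Whether a model fits comes down to how much memory its weights need: roughly parameter count times bits per parameter, divided by 8, in bytes. The sketch below uses a hypothetical 2.5-billion-parameter model stored at 16-bit precision and an assumed 1.5GB of headroom for activations and other overhead on both devices; none of these numbers are measurements of Flux or Stable Diffusion:

```python
# Rough check of whether a model's weights fit in a given memory pool.
# All model sizes and overheads here are illustrative assumptions.

def weights_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate size of the weights alone, in GB: params x bits / 8 bytes."""
    return params_billion * bits_per_param / 8

def fits(params_billion: float, bits_per_param: int,
         memory_gb: float, headroom_gb: float) -> bool:
    """True if the weights fit after reserving headroom for activations, etc."""
    return weights_gb(params_billion, bits_per_param) <= memory_gb - headroom_gb

MODEL_PARAMS_B = 2.5  # hypothetical model size, in billions of parameters
BITS = 16             # 16-bit weights -> 5 GB
HEADROOM_GB = 1.5     # assumed overhead, applied equally to both devices

rtx_2060_ok = fits(MODEL_PARAMS_B, BITS, memory_gb=6, headroom_gb=HEADROOM_GB)
jetson_ok = fits(MODEL_PARAMS_B, BITS, memory_gb=8, headroom_gb=HEADROOM_GB)

print(f"6 GB RTX 2060: {'fits' if rtx_2060_ok else 'out of memory'}")
print(f"8 GB Jetson:   {'fits' if jetson_ok else 'out of memory'}")
```

Using the same headroom for both is a simplification: the Jetson’s 8GB is shared with the CPU and operating system, so its real usable share is smaller than the raw figure suggests.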

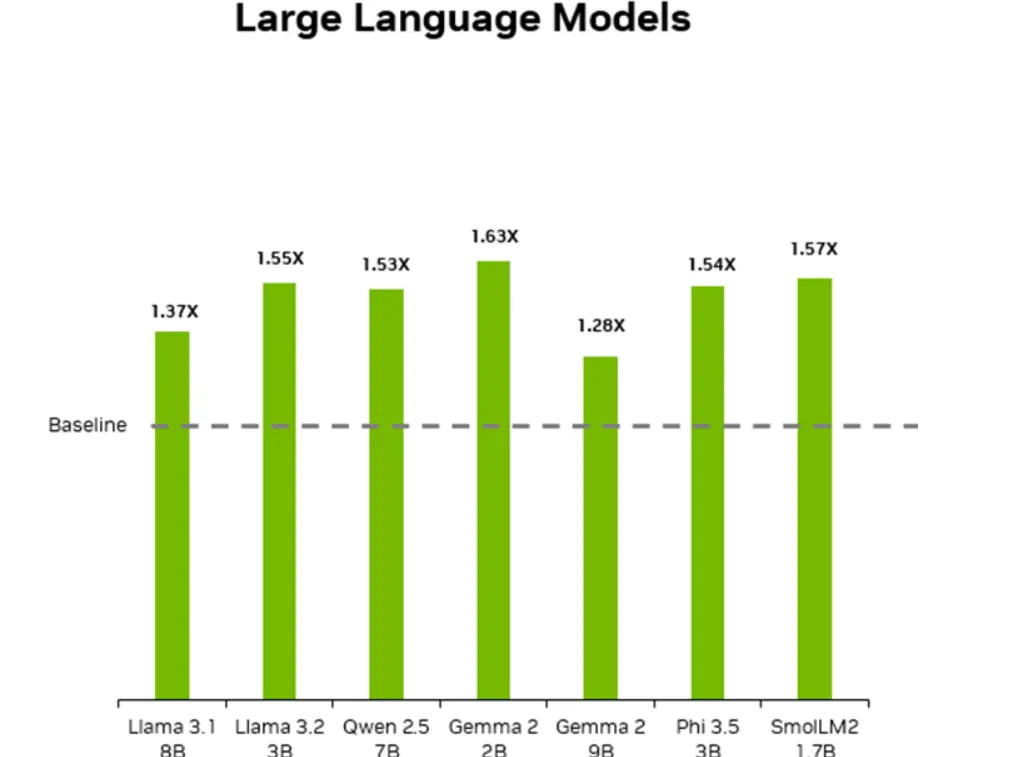

The Jetson Orin Nano Super also supports a range of small and large language models, including those with up to 8 billion parameters, such as Llama 3.1 8B. Running quantized versions of these models, it can generate about 18 to 20 tokens per second. That’s a bit slow, but still good enough for some local applications, and an improvement over the previous generation of Jetson hardware.
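A common rule of thumb helps explain that speed: generating each token requires reading roughly all of the model’s weights from memory, so memory bandwidth divided by model size gives a ceiling on tokens per second. The sketch below assumes 4-bit quantization (the article doesn’t state the bit width) and ignores the KV cache, quantization metadata, and compute limits, so it is an upper bound, not a prediction:

```python
# Back-of-the-envelope ceiling for LLM token generation on a memory-bound device.
# Assumptions: 4-bit weights, every weight read once per generated token.

def weights_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate size of the weights alone, in GB."""
    return params_billion * bits_per_param / 8

def max_tokens_per_second(bandwidth_gb_s: float, model_gb: float) -> float:
    """Upper bound on decode speed when limited by memory bandwidth."""
    return bandwidth_gb_s / model_gb

model_gb = weights_gb(8, 4)                     # 8B parameters at 4 bits
ceiling = max_tokens_per_second(102, model_gb)  # Jetson's 102 GB/s
print(f"Weights: {model_gb:.1f} GB, ceiling: ~{ceiling:.1f} tokens/s")

# Time for a hypothetical 200-token reply at the reported 18-20 tokens/s.
REPLY_TOKENS = 200
for rate in (18, 20):
    print(f"{rate} tokens/s -> {REPLY_TOKENS / rate:.1f} s per reply")
```

The reported 18 to 20 tokens per second sits below the roughly 25.5 tokens-per-second ceiling this estimate gives, which is what you would expect once real-world overheads are included.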

Given its price and specs, the Jetson Orin Nano Super is primarily designed for prototyping and small-scale applications. For power users, businesses, or applications that require extensive computational resources, its capabilities may seem limited compared with higher-end systems that cost significantly more and draw far more power.
