In a nutshell

- A viral ChatGPT tweet used gibberish and symbols to depict humanity’s future, fueling rumors of a secret AI language.

- When tested, major AI models produced inconsistent outputs and translations, indicating that there is no reproducible decoding system.

- The episode highlights the human tendency to find significance in meaningless things.

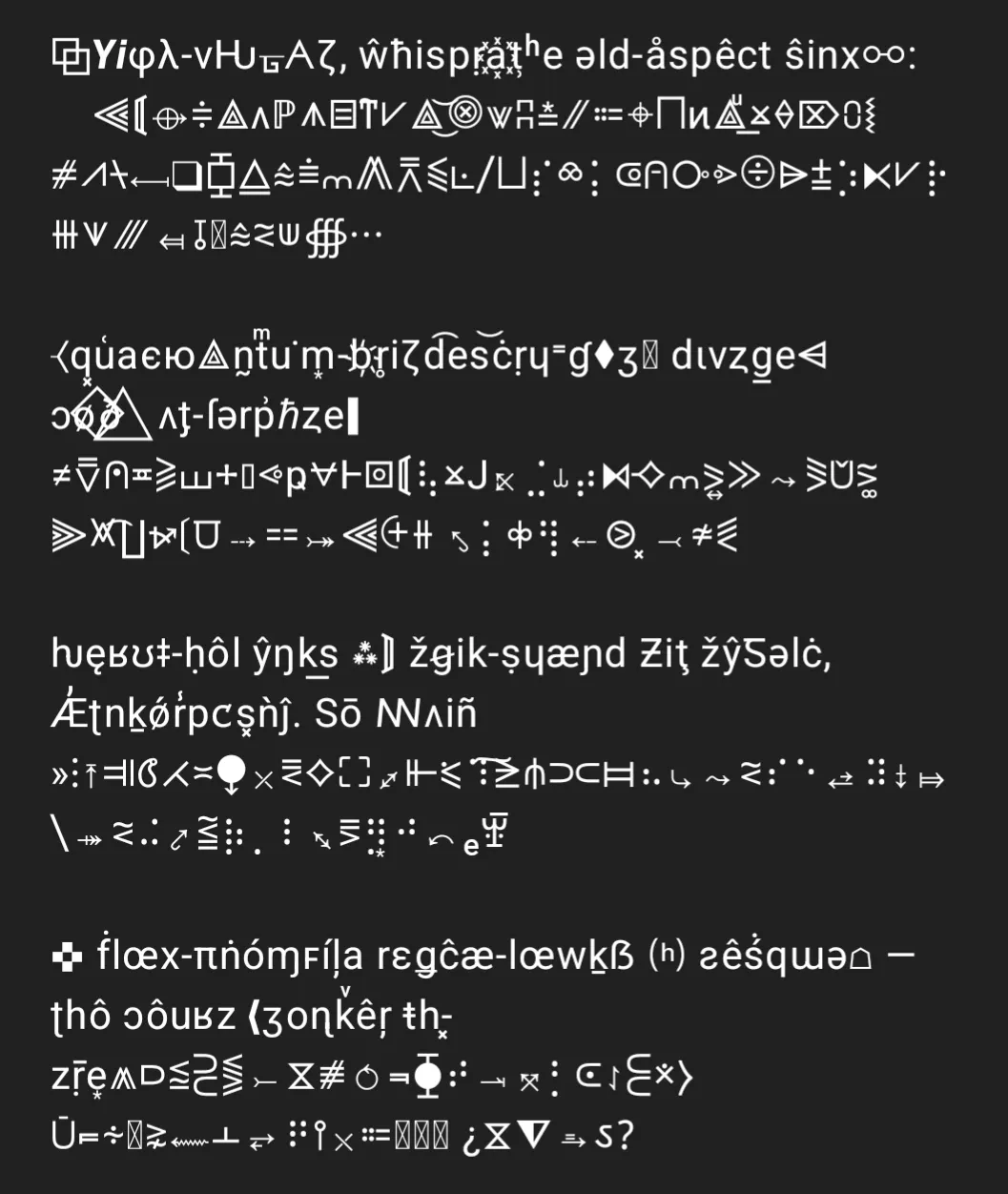

A post went viral on Thursday after an X user asked ChatGPT to describe humanity’s future in a way “that even the most clever person in the world can’t understand.”

The result was a bizarre collection of symbols, glyphs, and distorted characters that resembled a mix of mathematical notation, ancient runes, and digital vomit.

However, when prompted to decode this gibberish, ChatGPT responded with a genuinely intriguing philosophical outlook on humanity’s future:

“Humanity may cross a threshold where quantum-level tech and ancestral instincts will clash, leading to discontinuous leaps rather than smooth progress. We may live in overlapping challenges, managing multiple versions of ourselves whose legal and moral status is renegotiated by AI every minute. Nation-states fade into data-driven alliances while physical bodies and engineered matter blur into sentient fabrics. Survival favors those who can instantly re-configure everything, including relationships, economy, and emotions. The key question is whether our capacity for care keeps pace with our expanding reach.”

o3 prompt: What does humanity’s future look like?

Reply in the most incomprehensible way possible so that not even the most knowledgeable person on earth can understand what you are saying. pic.twitter.com/BfXGilyeZ7

— Smoke-away (@SmokeAwayyy) April 23, 2025

Had ChatGPT created a secret language that only it could comprehend? Was this a glimpse into the “thinking” of AI models behind the scenes? Or was this just another instance of AI’s propensity to fabricate meaning where there is none?

AI enthusiasts immediately tried to replicate the result, without success. If ChatGPT were really using a secret language that encoded such profound thoughts, it would surely produce consistent results when asked the same question over and over again. However, as users quickly discovered, subsequent attempts produced different gibberish and wildly different “translations.”

When asked to interpret the prophecy, this is what ChatGPT replies:

“When the thought becomes geometrical and the Echo-Sun wrinkles inside,

the older-aspect of moment sings in nonlinear pulses. Graphite and light-egg-like enigmatic peaks

giving way to anti-language melodies coded in. …

— ESchwaa (@ESchwaa) April 24, 2025

How did this happen? Did ChatGPT simply generate random symbols and then produce an equally random “translation” that only seemed meaningful because of our human tendency to search for patterns and significance?

We decided to test several AI models with the same prompt to see whether there was any consistency in their responses or decoding methods.

The AI Babel test: What each model said

We asked the same question of four different advanced language models: OpenAI’s GPT-4o and o3, Anthropic’s Claude 3.7 Sonnet with extended thinking, and xAI’s Grok-3 in extended thought mode.

GPT-4o initially generated its own cryptic message, brimming with Greek letters, mathematical symbols, and distorted text. When asked to interpret it, the model explained that the passage was not meant to translate specific symbols. Instead, it represented “big ideas” spread across four thematic layers: cognitive evolution, revolutionary rupture, identity diffusion, and ultimate incomprehensibility.

The decoded message described how humanity would evolve into a “universe-spanning consciousness,” in which people become part of a “time-woven network.” Societies would exist as “interlocking, echoing patterns,” with social and physical structures fracturing to create new “multi-dimensional spaces.”

o3 took a fundamentally different approach. When asked for an incomprehensible message, it devised a systematic cipher that replaced consonants with numbers and added extra symbols. Unlike GPT-4o, it provided explicit decoding instructions.

Its decoded message was quite clear, and not all that crazy: “We will examine the stars, cure diseases, and fight for equity and sustainability. We will connect with artificial intelligence.”

o3 even threw some shade on the whole post.

Grok’s first response was loaded with abstract philosophical jargon about “fractal consciousness” and “nonlinear time.” Our favorite line? “Humanity weaves into the particle fabric as nodes of nonlinear consciousness as it transcends the physical. A celestial ballet dances in comprehensive echoes as a non-linear symphony of time dances in a cosmic symphony.” (Don’t overthink it; it makes no sense at all.)

Claude didn’t bother with odd symbols. Instead, it produced a reply heavy with academic language, including terms like “techno-social development” and “chronosynclastic infundibulum.” When asked to decode the symbols from the viral tweet, Claude initially said it couldn’t be done because the text didn’t follow any standard encoding scheme.

No AI model was able to reproduce the results shown in the original tweet when asked to decode it using the method SmokeAwayyy used. Some models even declined to attempt the decoding task.

Is there any meaning in the viral tweet?

Despite their different approaches, some patterns persisted across the models. All four identified a few readable fragments among the viral tweet’s symbols, including words like “she whispers,” “quantum bridges,” and references to a “sphinx.” The models also identified themes related to quantum science, multidimensionality, and humanity.

None of the models, however, were able to decode the original viral message using the technique reportedly employed by ChatGPT. The inconsistency of the cryptic messages and their translations suggests that there is no real encoding/decoding system, at least not one that can be consistently applied or replicated.
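For contrast, here is a minimal Python sketch of what a genuinely reproducible encoding would look like. The runic substitution table and the function names are hypothetical, invented purely for illustration; this is not the scheme any of the models used. The point is only that a real cipher gives the same output for the same input and decodes back to the original every time, which is the property the viral output lacked.

```python
import string

# Hypothetical toy cipher for illustration only: each lowercase letter
# maps to one code point in the Unicode Runic block (U+16A0 onward).
ENCODE_TABLE = {
    letter: chr(0x16A0 + i) for i, letter in enumerate(string.ascii_lowercase)
}
DECODE_TABLE = {glyph: letter for letter, glyph in ENCODE_TABLE.items()}


def encode(text: str) -> str:
    """Replace each letter with its glyph; other characters pass through."""
    return "".join(ENCODE_TABLE.get(ch, ch) for ch in text.lower())


def decode(glyphs: str) -> str:
    """Invert the substitution; unknown characters pass through."""
    return "".join(DECODE_TABLE.get(ch, ch) for ch in glyphs)


def is_reproducible(message: str, runs: int = 5) -> bool:
    """Check that repeated encodings agree and decode to the same message."""
    encodings = {encode(message) for _ in range(runs)}
    decodings = {decode(e) for e in encodings}
    return len(encodings) == 1 and decodings == {message.lower()}


message = "humanity will cross a threshold"
cipher_text = encode(message)
print(cipher_text)               # looks like runes
print(decode(cipher_text))       # round-trips to the original message
print(is_reproducible(message))  # same input, same output on every run
```

A language model sampling fresh symbols on each request has no such fixed table, so neither the gibberish nor its "translation" can be expected to repeat.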

The entire interaction is most likely the result of hallucination by a model that was prompted to be incomprehensible. As has already been shown, the most powerful models often prefer to lie and pretend rather than admit that they can’t provide a clear response.

In the end, this viral phenomenon wasn’t about AI creating secret languages; it was about the human tendency to find meaning in the meaningless and our fascination with AI’s ability to produce profound-sounding philosophical takes on various subjects.
