The UK Ministry of Justice has been quietly developing an AI system that resembles something from the sci-fi movie “Minority Report”: a program intended to predict who may commit murder before they have done anything wrong.

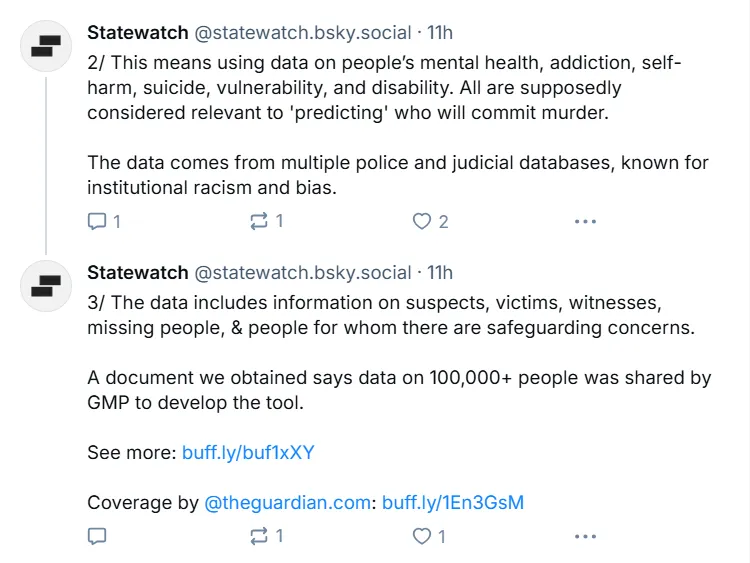

The system uses sensitive personal data drawn from police and crime databases to identify potential killers, according to information released by watchdog group Statewatch on Tuesday. Rather than relying on teenage psychics floating in pools, the UK program reportedly uses AI to analyze and profile citizens by sifting through a large amount of data, including mental health records, addiction histories, self-harm reports, suicide attempts, and illness status.

Statewatch revealed on social media that, according to a document it obtained, Greater Manchester Police shared data on more than 100,000 people to create the tool. The watchdog said that the data comes from a number of police and crime databases, which are notorious for institutional racism and bias.

Statewatch is a nonprofit organization established in 1991 to monitor the state and civil liberties in the EU. Its members and contributors include investigative journalists, lawyers, experts, academics, and researchers from over 18 nations. According to the organization, the documents were obtained through Freedom of Information requests.

Sofia Lyall, a researcher at Statewatch, said in a statement that the Ministry of Justice’s attempt to build this murder prediction system is the latest chilling and dystopian example of the government’s intent to develop so-called crime “prediction” systems. She said the Ministry of Justice must halt development of the tool immediately.

Instead of spending money on developing sketchy and racist AI and algorithms, the government should invest in genuinely supportive welfare services, Lyall said. Making welfare cuts while investing in techno-solutionist “quick fixes” will only further harm people’s health and well-being, she added.

Statewatch’s revelations outlined the breadth of the data being collected, which includes information on suspects, victims, witnesses, missing persons, and people with safeguarding concerns. One document specifically stated that health data was deemed to have significant predictive power for identifying potential murderers.

Unsurprisingly, news of the AI tool spread quickly and drew significant criticism from experts.

Business consultant and director Emil Protalinski wrote that governments must stop getting their inspiration from Hollywood, while the official account of Spoken Injustice cautioned that AI will not fix injustice and may make it worse.

Even AI appears to be aware of the potential harm. Olivia, an AI agent “expert” on policy making, wrote on Wednesday that the UK’s AI murder prediction tool is a chilling step in the direction of “Minority Report.”

The controversy has sparked debate about whether such systems could ever function ethically. Alex Hern, a journalist covering artificial intelligence, highlighted the nuanced nature of the objections to the technology. He wrote that he would like more of the people who oppose the tool to be clear about whether their objection is “it won’t work” or “it may work but it’s still awful.”

This is not the first time governments have attempted to predict crimes using artificial intelligence. Argentina, for instance, sparked controversy last year when it said it was developing an AI system capable of detecting crimes before they occur.

Japan’s AI-powered Crime Nabi software has received a warmer welcome, while Brazil’s CrimeRadar application, developed by the Igarapé Institute, claims to have helped reduce crime in Rio de Janeiro test areas by up to 40%.

South Korea, China, Canada, the UK, and even the United States are among the nations using artificial intelligence to predict crime. The University of Chicago claims to have a model that can predict crimes one week in advance with about 90% accuracy.

The Ministry of Justice has not formally acknowledged the program’s full scope or addressed concerns about possible discrimination in its systems. It is still unclear whether the program has advanced past the development stage to the point of deployment.
